This is my contribution to “Digital Unconscious – Nervous Systems and Uncanny Predictions!” (Autonomedia 2021), edited by Konrad Becker and Felix Stalder. You can get a nice printed copy directly from the publisher, Autonomedia.

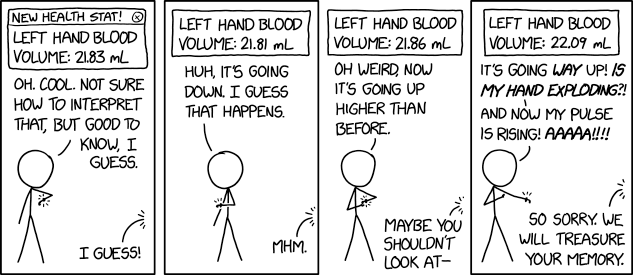

Source: xkcd.com

Driven by the need to manage large-scale, complex systems in real time, the notion of rationality shifted during the Cold War. Rationality was no longer seen as something that required the human mind, but rather as something that required large technical systems (Erickson et al. 2013). While the Enlightenment idea of rationality emphasized reflectivity (as in ‘know thyself’) and moral judgment (as in Kant’s categorical imperative), this new notion emphasized objectivity (in the form of numbers) and strict adherence to predetermined rules (in the form of checklists, chains of command, and computational algorithms).

The study of the self has long resisted this shift. Throughout the 20th century, psychology, with almost all its variants based on individual introspection, remained the predominant mode of learning about oneself (Zaretsky 2005). Within the domain of psychology, the exception, of course, was behaviorism, which was strictly based on external observation and disregarded all accounts of mental states. Its impact on the study of the self was rather limited, both because it was used primarily to learn about others rather than oneself and because its methodological and political groundings were quite controversial. Its main proponent, B. F. Skinner, was, as Noam Chomsky (1971) put it, “condemned as a proponent of totalitarian thinking and lauded for his advocacy of a tightly managed social environment”.

With the advent of cheap, small digital sensors and processors, this shift is now also taking place within the domain of individual self-knowledge. Gary Wolf, a prominent advocate of the ‘Quantified Self’, introduced the idea to the wider public in 2010 as follows:

People do things for unfathomable reasons. They are opaque even to themselves. A hundred years ago, a bold researcher fascinated by the riddle of human personality might have grabbed onto new psychoanalytic concepts like repression and the unconscious. These ideas were invented by people who loved language. Even as therapeutic concepts of the self spread widely in a simplified, easily accessible form, they retained something of the prolix, literary humanism of their inventors. From the languor of the analyst's couch to the chatty inquisitiveness of a self-help questionnaire, the dominant forms of self-exploration assume that the road to knowledge lies through words. Trackers are exploring an alternate route. Instead of interrogating their inner worlds through talking and writing, they are using numbers. They are constructing a quantified self. …

The contrast to the traditional therapeutic notion of personal development is striking. When we quantify ourselves, there isn't the imperative to see through our daily existence into a truth buried at a deeper level. Instead, the self of our most trivial thoughts and actions, the self that, without technical help, we might barely notice or recall, is understood as the self we ought to get to know. Behind the allure of the quantified self is a guess that many of our problems come from simply lacking the instruments to understand who we are. Our memories are poor; we are subject to a range of biases; we can focus our attention on only one or two things at a time. We don't have a pedometer in our feet, or a breathalyzer in our lungs, or a glucose monitor installed into our veins. We lack both the physical and the mental apparatus to take stock of ourselves. We need help from machines.

Wolf makes three arguments here. First, people and their personal knowledge about themselves are unreliable. This is partly because human consciousness – the capacity to see, understand, and recall the world – has a very narrow bandwidth. Most events are simply ignored, forgotten, or relegated to the subconscious. And it is partly because humans are vain, dishonest, and prone to self-delusion, quickly editing memory to suit their self-image rather than their actual self. In other words, they are unreliable sources of self-knowledge, even in the best of circumstances.

Second, psychology is not only terribly old-fashioned (‘a hundred years ago…’), but also somewhat elitist (‘literary humanism’). Thus, the previously dominant mode of self-knowledge was not only based on untrustworthy sources, it was also using outdated methods, hampered by an irredeemable grain of snobbery. In short, it’s of no use. A new way of knowing is proposed in its place, one whose very existence depends on machines, whose ability to record the world is not only more extensive but also unquestionably correct, since objective events are now registered without omission or distortion. From this, a new sense of self emerges, one that is directly amenable to improvement.

After all, so goes the argument, once one is confronted with the cold, hard truth of not having walked enough in a day (that is, having taken fewer than the proverbial 10,000 steps), there is no longer an excuse for not having done what is right. The proof of one’s fallibility is incontrovertible. There is a sense of peer pressure here (as in therapeutic sessions of, say, AA), except the pressure now comes from unrelenting, infallible machines. And they help us to become the best version of ourselves.

Since this argument burst into public view a decade ago, self-tracking has been widely taken up, with estimates ranging as high as 33% of the global adult population using either a dedicated fitness tracker or a smartphone app for that purpose. While this number seems to include everyone who owns a smartphone (because of preinstalled apps), a more robust survey puts the number of US adults actively self-tracking at about 20%. Either way, self-tracking has become a mass movement for the technologically literate middle classes and an important way in which people relate to themselves. Anxieties around Covid-19 are now providing a further boost to this practice, not least through projects like ‘the quantified flu’.

My purpose here is not to reiterate the well-known criticisms of this neo-behaviorist approach as either obstructing the view of the real self (Wallace 2010) or being overly normative (Neff and Nafus 2016); as an instance of neo-liberal self-optimization (Catlaw and Sandberg 2018) leading to more external control and exploitation (Moore and Robinson 2016); or as superficial ‘solutionism’ (Morozov 2013). Rather, I want to think about the limits that this technological view of the self entails and how these limits produce what I call ‘known unknowables’ that feed into a general sense of paranoia, if left unchecked.

Known Unknowables

Early in the second Iraq war, Donald Rumsfeld famously spoke about ‘known unknowns’, things we know to exist but have no knowledge about yet, and ‘unknown unknowns’, things we don’t even know exist. I want to add a further category: ‘known unknowables’. These are things that we know exist, but with that knowledge comes the realization that we cannot know them in sufficient detail. In other words, we know that they will remain unknown.

In quantified systems there are three types of known unknowables, which lie beyond the horizons of visibility created by these systems. As the horizons move forward, so do these unknowables. Thus, they are not a residual category that will eventually disappear as the systems improve. They are a constitutive part of these systems, directly related to the types of knowledge produced and the conditions that produce them. Indeed, it's safe to assume that as datafication expands, so do the unknowables it produces.

The first ‘known unknowable’ is the data itself. There is a premise underlying all promises of quantification: a data point is a simple number directly derived from a complex external event, representing it in a way that is unambiguous and thus self-explanatory. The number on the pedometer represents the number of steps taken, which in turn represents the sum of my walking, which in turn represents an important factor of my health, and the number of 10,000 is the line I need to cross to be healthy. It is not difficult to see that this premise stands on very shaky ground. First, data is never ‘raw’ but always ‘cooked’, that is, it is not a simple natural fact, but rather the product of cultural manipulation and fabrication that creates, as much as it indicates, reality (Gitelman 2013). The same holds, even more so, for the meaning of data. The figure of 10,000 steps per day stems from a Japanese marketing campaign launched in 1964 to sell pedometers. It’s a great number, pure and objective in its de-contextualization, easy to memorize, and large enough to feel like a gratifying accomplishment. Contemporary health research has found this number to be something between arbitrary and meaningless (Hammond 2019). Second, since the very virtue of these machines is to exceed our sensibilities, and the virtue of this data is to represent our ‘non-conscious’ acts – those too small, too ephemeral, too numerous to be brought into our awareness without creating cognitive overload and paralysis – we cannot check the veracity of the data without the help of other machines, which create their own sets of data, sending us down the rabbit hole of infinite regress. Thus, we cannot know what the exact relation is between the data and the events that it is supposed to represent.

What does the number on the pedometer really indicate? It is unlikely to be the exact number of actual steps taken, despite the misleading precision of the number on the display. It’s not just a question of measurement accuracy. It is also a question of the arbitrary conventionality of the measuring category. A step counted is not actually a step taken (whatever that would be), but a threshold in some model of ‘steps’, and this model reflects what is accessible to the technical senses. Also, in reality, not all steps are equal. Yet, two small steps are counted as two events even if they move me the same distance as one large step. At best, the data represents some form of approximation. But how approximate this approximation is, and what to do with the number, remains unknowable. And we know it.
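To make the point about thresholds concrete, here is a minimal sketch in Python. It is not how any actual pedometer works. The signal shape, the amplitudes, and the function names `synthetic_signal` and `count_steps` are all assumptions made up for illustration. The sketch treats a ‘step’ as nothing more than an upward crossing of a threshold in a toy acceleration trace, and shows that the same six movements yield different step counts depending on where the threshold is set.

```python
import math

def synthetic_signal(step_amplitudes, samples_per_step=20):
    """Build a toy acceleration trace: one half-sine bump per movement.

    Purely illustrative; real sensor data is far noisier.
    """
    signal = []
    for amplitude in step_amplitudes:
        for i in range(samples_per_step):
            signal.append(amplitude * math.sin(math.pi * i / samples_per_step))
    return signal

def count_steps(signal, threshold):
    """Count upward crossings of the threshold; each crossing is one 'step'."""
    count = 0
    above = False
    for value in signal:
        if value > threshold and not above:
            count += 1
            above = True
        elif value <= threshold:
            above = False
    return count

# Six movements of varying force (hypothetical values).
amplitudes = [1.0, 0.4, 1.2, 0.3, 0.9, 0.5]
signal = synthetic_signal(amplitudes)

for threshold in (0.2, 0.45, 0.8):
    print(f"threshold={threshold}: {count_steps(signal, threshold)} steps")
```

With these made-up values, the three thresholds report 6, 4, and 3 steps for one and the same walk. Which of these is the ‘true’ count is a matter of convention built into the model, not something the data can settle.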

In practice, this problem is pragmatically sidelined by the efficacy of the data. Similar to the shift from causation to correlation, it’s not the explanation but the effects that matter. As long as the data works, it doesn’t matter how it comes about. But determining efficacy is more complicated when it comes to long-term predictions, particularly for something as vague as personal well-being. Here, the nagging question of what the data is actually supposed to tell me about myself is harder to contain. I’ll come back to this point.

The second ‘known unknowable’ is the knowledge that quantification does not create. Personal experience has the advantage of giving the impression of being holistic. When I wake up in the morning and feel rested and ready to get up, I can say with sufficient confidence that I slept well. Almost by definition, I have no conscious knowledge of my sleeping (Fuller 2018) – apart from, possibly, my dreams, though that’s a different domain – yet it is not difficult to render a useful judgment on its overall quality that settles the question. Quantified knowledge is different. Its precision relies on reduction.

Even if we could overcome the problems of reliability/readability indicated above, the numbers are derived from the measurement of only one precise aspect, immediately indicating other aspects that haven’t been measured but might be important. Of course, if technically possible, these can and should be measured as well, fueling a never-ending hunger for data and leading to a self-propelling expansion of datafication regimes. But this simply moves the goalposts, because the more precise the measurement, the narrower it is, creating a kind of point cloud vision. Yet, a full picture never emerges from an assembly of dots. It might from afar, but if one increases the resolution – zooms in, so to speak – the space between the dots is always vast and empty. And as in fractal geometry, there is no natural end to it.

Recessive Objects

Quantification creates what Sun-ha Hong calls ‘recessive objects’, which are “things that promise to extend our knowability but thereby publicize the very uncertainty that threatens those claims to knowledge” (2020, 31). Recessivity, in some basic form, is part of all knowledge systems. Yet, Hong points out, “specific forms of recessivity have distinctly political and ethical implications.” In this case, it's the open arbitrariness by which the line is established between what is known and what is there but unknowable. It's what the machine gives you, no matter what might be there. A chasm opens up between the machinic vision of ourselves, and the knowledge we derive from it, and the everyday experience we invariably have of ourselves. The chasm is created by the fact that these measurements do not simply extend our senses, but confront us with an entirely different perspective, as technical sensors give us access to a world that lies beyond our own cognitive capacities (which, from the point of view of quantification, are faulty and untrustworthy to begin with).

In traditional large-scale systems, the potentially corrosive effects of recessivity have been limited by the trust we place in the institutions and the experts who run these systems. We, by and large, cannot but trust the military, which runs the missile defense system, to have a sufficiently robust understanding of which dot on the radar is a missile, and that everything that needs to be known in order to be able to act on that indicator is measured and available. Even if we know that such knowledge is never perfect, we trust that the institutions, through these large-scale technical systems, produce the best knowledge obtainable. Of course, trust in such institutions was never uniform or complete. Yet, for a long time, the paranoia created by not trusting and not knowing remained limited to small, isolated groups and was directed outwards, towards the state. Quantifying oneself, however, is different, because here each person is doing all the work for him-/herself. While, in principle, this could create another trust relation, because everything here is under direct personal control, in practice, the opposite happens for all but the most dedicated tinkerers. Complexity quickly becomes overwhelming, and the subjects who are called upon to know for themselves are confronted with the impossibility of this task. Thus, there is a strong incentive to delegate this to new ‘experts’ who provide specialized hardware and software and the analytical tools to make sense of the data. The engineers at Fitbit (whose acquisition by Google was announced in November 2019), we assume, know how to calibrate the sensors and interpret the data.

But this confronts us with the third unknowable, that is, the machinery that creates the knowledge, and the question of who constructs it and for which purpose. If the revelations about the NSA by Edward Snowden, and about Cambridge Analytica, did anything beyond creating their own ‘recessive objects’, it was to popularize a deep suspicion of the very technologies through which our lives are organized, and of the companies that create and provide these technologies to us. We all now assume that behind the stated functionalities and the official interfaces, there are functionalities and layers completely hidden from us. We know a few of them, but we also know that there are many more we don’t know and that what we know might be outdated by the time we’ve come to know it. After all, the government programs revealed by Snowden are, by now, at least seven years old and have most likely changed, not just because of technological progress, but also in reaction to their becoming public knowledge. And at least part of the reason why these layers and functionalities are hidden, we must assume, is that they work against us and consent would not be forthcoming if we had an option to withhold it. In other words, we know that we will never know what exactly is being done with our data, why it is being recorded (to improve our own health, or because it’s valuable to someone else?), whether all the data that is recorded is also made available to us, and whether there are any other uses of that data that might harm us in the future. If the analyst in a Freudian psychoanalysis session is studiously indifferent, we must now assume that the new apparatuses on which our self-knowledge relies are veering unpredictably from being friendly toward us to being outright hostile.

And from this, a new sense of self emerges. But not the one that the evangelists of ‘self-quantification’ promise – a self-transparent machine that can be endlessly tweaked and optimized – but something far darker. A self that can never be certain of itself, that cannot trust itself, that must assume that what is known about itself is unreliable – constantly on the lookout against itself. In other words, the paranoia that used to be directed outwards becomes directed inwards as well.

The Paranoid Self

‘Paranoia’, the mental health website of the British government states, “is thinking and feeling like you are being threatened in some way, even if there is no evidence, or very little evidence, that you are.” The list of symptoms of ‘paranoid personality disorder’, provided by the US government’s MedlinePlus, begins with, “concern that other people have hidden motives [and] thinking that [one] will be exploited (used) or harmed by others”. In clinical definitions, the assessment of paranoia rests on a distinction. If there is generally accepted evidence that others have motives they are trying to hide, or are secretly trying to exploit one, then such an attitude is justified distrust. If there is no accepted evidence, then this attitude is a ‘personality disorder’ based on delusions.

While it is understood that the boundary of what counts as evidence is subject to reasonable debate, it is assumed that the outer edges of the spectrum between distrust and paranoia are mutually exclusive. One cannot have solid evidence and no evidence about something at the same time. In a world of recessive objects, this is no longer the case. On the contrary, the very processes of knowing create the precondition of ‘not-knowing’, though not in the sense of simply extending knowledge to a new frontier, but of bringing into view, paradoxically, that which cannot be known at all.

Self-quantification, then, does not finally provide solid self-knowledge but destabilizes the self on three counts. First, on epistemological grounds, the relationship between the world to be measured and the data created by acts of measurement remains ambiguous at best. Data, far from being objectively correct and clear (or objectively false and to be corrected in the next iteration of the measuring process), remains ever ambiguous, in need of interpretation and contextualization before it can yield real information, let alone knowledge.

Second, on phenomenological grounds, the very precision of quantitative knowledge claims is based on their reduction of complex situations to measurable indicators. With every reduction, things are left out, and, paradoxically, the more one looks into it, the larger the area not covered becomes.

Third, on economic/political grounds, as we come to rely more and more on these infrastructures for knowing the world, and as more and more data is being created, analyzed, and acted upon, the chasm widens between what we can know ‘for ourselves’ and what machines tell us about ourselves. The only way this chasm can be bridged is by trust: by the assumption, to the best of our abilities, that what the machines tell us is correct, and that it is created and analyzed in the interest of the person whom this data is about. But this trust is constantly eroded, not just by the generally exploitative character of data regimes under ‘surveillance capitalism’ and the ‘black boxing’ of technologies (Stalder 2019), but by the exceptional power differentials between those who provide the technologies and the rest of society. These power differentials have become so massive that it’s simply impossible for end-users to evaluate critically the sense of self they gain from these infrastructures. In a way, all complex machines are opaque to their users.

Most people don’t know much more about, say, their cars, than what is revealed by the interfaces provided to them. But if need be, they can always take their car to a mechanic to whom the machine is transparent. What is new is that now nobody can evaluate the technologies provided to them. Not only because they are so complex, but because the power of the companies and their owners overwhelms both civil society’s ability to do independent research and the state’s ability to provide effective oversight (Doctorow 2020). These issues are not restricted to self-quantification. However, it is through these technologies that they become embedded in our very sense of self. Together, all of this creates a new type of self which is at once overconfident in its knowledge and deeply suspicious about what lurks in the recesses of the ‘known unknowables’. Socrates’ famous quip, “I know that I know nothing”, takes on an all-new meaning.

More Data Will Not Solve the Problem

More data will not help to counteract this. Rather, the different types of unknowables need to be addressed differently. First, on the epistemological level, data needs to be understood as irreducibly ambiguous, and we need to develop mechanisms to negotiate this ambiguity, rather than repress it through more and more complex methods of data science. Outside of technological systems, we are quite capable of living with ambiguity. Indeed, as Mireille Hildebrandt (2016) has argued, ambiguity is an important source of human freedom, as it is from ambiguity that the need for value-based decision-making arises. If multiple things are equally plausible, then we need to make the types of decisions that no algorithm ever could. Second, on the phenomenological level, the need for a diversity of knowledge systems becomes ever more evident.

Quantification has a tendency to devalue other, particularly embodied, implicit, and holistic systems of knowledge as imprecise or outdated, and to ignore them for not being machine-readable. Against this, both the power and the limits of quantified knowledge need to be understood more explicitly, bridges to other systems of knowing need to be strengthened, and new ones built. Third, the extreme power differentials between the providers of the technological infrastructures and proprietary data-driven services and everyone else are, to a large degree, an effect of monopoly capitalism.

The recent debates on how to bring anti-trust law to bear on the ‘free services’ provided by the new monopolists (Wu 2018), and the fact that regulators in the US and the EU have begun to take potentially significant anti-trust action, are a good sign that the problem is being recognized, although they are not yet enough to address it. As long as these issues remain, the fear of the ‘known unknowables’ – partly justified, partly paranoid – will be installed at the core of the quantified personality.

Bibliography

Catlaw, Thomas J., and Billie Sandberg. 2018. “The Quantified Self and the Evolution of Neoliberal Self-Government: An Exploratory Qualitative Study.” Administrative Theory & Praxis 40 (1): 3–22. https://doi.org/10.1080/10841806.2017.1420743.

Chomsky, Noam. 1971. “The Case Against B. F. Skinner.” The New York Review of Books. December 30, 1971. https://chomsky.info/19711230/.

Doctorow, Cory. 2020. “How to Destroy ‘Surveillance Capitalism.’” Medium. August 26, 2020. https://onezero.medium.com/how-to-destroy-surveillance-capitalism-8135e6....

Erickson, Paul, Judy L. Klein, Lorraine Daston, Rebecca M. Lemov, Thomas Sturm, and Michael D. Gordin. 2013. How Reason Almost Lost Its Mind: The Strange Career of Cold War Rationality. Chicago ; London: The University of Chicago Press.

Fuller, Matthew. 2018. How to Sleep: The Art, Biology and Culture of Unconsciousness. Lines. London ; New York: Bloomsbury Academic.

Gitelman, Lisa, ed. 2013. “Raw Data” Is an Oxymoron. Cambridge, Massachusetts ; London, England: The MIT Press.

Hammond, Claudia. 2019. “Do We Need to Walk 10,000 Steps a Day?” bbc.com (29.07.). 2019. https://www.bbc.com/future/article/20190723-10000-steps-a-day-the-right-....

Hildebrandt, Mireille. 2016. “Law as Information in the Era of Data-Driven Agency.” The Modern Law Review 79 (1): 1–30. https://doi.org/10.1111/1468-2230.12165.

Hong, Sun-ha. 2020. Technologies of Speculation: The Limits of Knowledge in a Data-Driven Society. New York: New York University Press.

Lupton, Deborah. 2016. The Quantified Self: A Sociology of Self-Tracking. Cambridge, UK: Polity.

Moore, Phoebe, and Andrew Robinson. 2016. “The Quantified Self: What Counts in the Neoliberal Workplace.” New Media & Society 18 (11): 2774–92. https://doi.org/10.1177/1461444815604328.

Morozov, Evgeny. 2013. To Save Everything, Click Here: The Folly of Technological Solutionism. New York, NY: PublicAffairs.

Neff, Gina, and Dawn Nafus. 2016. Self-Tracking. The MIT Press Essential Knowledge Series. Cambridge, Massachusetts: The MIT Press.

Stalder, Felix. 2019. “The Deepest of Black. AI as Social Power / Das Tiefste Schwarz. KI Als Soziale Macht.” In Entangled Realities Living with Artificial Intelligence/ Leben Mit Künstlicher Intelligenz, edited by Sabine Himmelsbach and Boris Magrini, 125–47. Basel: Christoph Merian Verlag.

Wallace, Lane. 2010. “The Illusion of Control.” The Atlantic. May 26, 2010. https://www.theatlantic.com/technology/archive/2010/05/the-illusion-of-c....

Wolf, Gary. 2010. “The Data-Driven Life.” New York Times Magazine. April 28, 2010. https://www.nytimes.com/2010/05/02/magazine/02self-measurement-t.html.

Zaretsky, Eli. 2005. Secrets of the Soul: A Social and Cultural History of Psychoanalysis. New York: Vintage.