This is my contribution to the catalogue for the exhibition "Entangled Realities – Living with Artificial Intelligence" showing at HEK, Basel 09.05.2019 - 11.08.2019.

In day-to-day life, most technologies are black boxes to me.[1] I don’t really know how they work, yet I have a reliable sense of the relationship between the input, say pressing a button, and the output, the elevator arriving. What happens in between, whether simple local circuitry or a far-away data centre is involved, I don’t know and I don’t care. Treating complex systems as black boxes is a way of reducing complexity, and this is often a very sensible thing to do. However, not all black boxes are equally black, and the depth of the blackness matters quite significantly, not least in terms of the power relations produced through the technology. The application of artificial intelligence tends to produce particularly dark shades of black. To deal with these applications in ways that do not undermine democracy, and that do not further increase the already high concentration of power in the hands of a few technology firms, it is important to distinguish between technical and social shades of black. Art, with its unique ability to create new aesthetics, languages and imaginations, can play an important role in this battle.

Jenna Sutela, nimiia cétiï, 2018

Into the Box

Let’s step into the elevator box. It’s not dark at all. Rather, its blackness is extremely shallow, a question of convenience, created by my lack of technical understanding, access, and, most importantly, interest. For the technicians who design and repair the elevator, it is not a black box at all. Their knowledge, access, and interest turn the elevator into a fully readable system in which all parts are known. In other words, they can make the black box transparent at any time.

The old kind of black

As technologies become more complex, the blackness of the box gets deeper. No single person or company understands, say, a personal computer in full. Even the technicians who understand one component in detail face many other components (hardware and software) of which they understand only the relationship between input and output, not how exactly this relationship is created.[2] Still, in theory, every single component is understandable to someone, and if need be each of the many black boxes could be made transparent. This is important in the context of security audits and the determination of liability.[3] In most practical situations, however, transparency is not necessary, particularly, and importantly, as long as the input/output relation remains stable: input A always generates output B. If input A suddenly generates output C, I am alerted that the machine might be broken (for example, by a software bug) or has otherwise been compromised. This predictability gives me, as the human operator, autonomy with respect to the machine. As a non-deterministic person, I can exercise a certain degree of control over the deterministic machine, even if I don’t understand how the machine works.[4]
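
A minimal Python sketch can make this “old kind of black” concrete. It assumes a hypothetical elevator controller (`elevator_controller`, plus a faulty variant `broken_controller`) and a hypothetical `operator_check` function; none of these names come from any real system. The operator never looks inside the machine and relies only on a remembered record of which input produced which output.

```python
from typing import Callable, Dict, List


def elevator_controller(button: int) -> str:
    """Hypothetical deterministic black box: the same button always yields the same result."""
    return f"elevator arrives at floor {button}"


def broken_controller(button: int) -> str:
    """Hypothetical faulty variant: for floor 5, input A now produces output C."""
    if button == 5:
        return "elevator arrives at floor 1"
    return f"elevator arrives at floor {button}"


def operator_check(machine: Callable[[int], str], known: Dict[int, str]) -> List[int]:
    """Return the inputs whose current output differs from the remembered output.

    The operator never inspects the machine's internals; only the
    remembered input/output pairs in `known` are used.
    """
    return [given for given, expected in known.items() if machine(given) != expected]


# What the operator has learned from experience: input A has always given output B.
known_behaviour = {
    3: "elevator arrives at floor 3",
    5: "elevator arrives at floor 5",
}

print(operator_check(elevator_controller, known_behaviour))  # []
print(operator_check(broken_controller, known_behaviour))    # [5]
```

The check needs no knowledge of the internals at all: as long as the mapping stays stable, any deviation from it is enough to raise an alarm.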

The new kind of black

With respect to complex systems that have artificially intelligent components, the character of the black box is radically different. Blackness is no longer a question of my relationship to the system, a convenient strategy for reducing complexity by concentrating on a stable input-output relationship; it is now a question of ontology. It relates directly to how these technologies exist in the world, how they are designed and how they operate. In an increasing number of instances, particularly those involving machine learning, there are elements within the complex system that are non-transparent even to the people who built the system, let alone to those who use it.

This deeper shade of black is created by adding multiple layers of coating, so to speak. First, the programmers only set the initial parameters and the goal towards which the learning process is optimized, say, recognizing cats in images. The internal structure of the solution that emerges from an iterative process of “learning” – selecting whichever approach moves the output closer to the predefined goal, for reasons that remain unknown – is unreadable even to those who define the start- and endpoint. They cannot determine whether the solution thus created is the best one possible, or one that is simply good enough, satisfying the criteria specified at the outset, say a success rate of 95% in recognizing cats in images. Second, if it cannot really be known why the system reaches a certain result, then it also cannot be known whether the results generated “at scale”, in the world at large, are as accurate as the results achieved on the controlled training data.[5] And the training data has to be controlled, since otherwise the “learning” – the improvement in relation to a predefined function – cannot be assessed. In the case of simple image recognition, both false positives (labelling a dog as a cat) and false negatives (failing to label a cat when there is one) are detectable by humans, because here AI essentially mimics human cognitive capacities, simply scaling them up to non-human speed. This still allows the output of the AI system to be compared with the output of another system, say, a person looking at the pictures. But such comparisons between methods are not always possible, and this creates a third reason why such systems come with a deeper shade of black.
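
The comparison described here, checking a classifier’s labels against those of a person looking at the same pictures, can be sketched in a few lines of Python. The function name `compare_with_human` and the labels are made-up toy data for illustration, not results from any real system; the sketch only shows how false positives and false negatives are counted once a human reference exists.

```python
from typing import List, Tuple


def compare_with_human(model_says_cat: List[bool], human_says_cat: List[bool]) -> Tuple[int, int, float]:
    """Count errors of a (hypothetical) cat detector against human judgements.

    Returns (false_positives, false_negatives, accuracy), treating the
    human labels as the reference.
    """
    if len(model_says_cat) != len(human_says_cat):
        raise ValueError("both lists must describe the same images")
    pairs = list(zip(model_says_cat, human_says_cat))
    # False positive: the model labels something a cat that the human says is not one.
    false_positives = sum(m and not h for m, h in pairs)
    # False negative: the model misses a cat that the human sees.
    false_negatives = sum(h and not m for m, h in pairs)
    correct = sum(m == h for m, h in pairs)
    return false_positives, false_negatives, correct / len(pairs)


# Toy labels for ten images (invented for illustration).
model = [True, True, False, True, False, False, True, True, False, True]
human = [True, False, False, True, False, True, True, True, False, True]
print(compare_with_human(model, human))  # (1, 1, 0.8)
```

Everything hinges on the `human` list: where no independent reference of this kind exists, the errors cannot be counted this way.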

AI systems are also tasked with solving problems that humans could not solve themselves, say assessing insurance risk based on social media data.[6] Here, too, there is a training stage: feeding large quantities of social media data about people who have a known insurance history into the system and then trying to find a model that will “predict” this known history from the data. The goal here is not elusive “perfect knowledge” but simply higher accuracy than the previous model, which treated all young drivers as higher risk, based on their lack of experience and an assumed propensity of youth to do risky things. Once the new model outperforms the previous one, there is a business case for employing it, no matter how many false positives (people who get unjustly hit with a higher premium) or false negatives (people whose risk is underestimated) it produces. Only over time, and with frequent feedback and testing, can it be assessed whether the predictions were “correct”. As it turns out, often they are not, though this knowledge rarely reaches those affected by it.[7] Independent of the question of the accuracy of AI processes, from the point of view of the person whose insurance rate is set by such a system, the decision appears arbitrary. Unknown rules are applied in a nontransparent and unpredictable manner, creating results that cannot be challenged. It is the opposite of “due process”, a fundamental concept for ensuring the fairness of (legal) decision making.[8] It is simply a verdict without justification or process of appeal. As long as there is a competitive marketplace, there are at least several such verdicts to choose from. But of course, not all aspects of life – not even in the economy – can, or should, be organized as competitive marketplaces.
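
The decision logic described here, where a new model replaces the old one as soon as it is more accurate overall, regardless of how many individuals it misjudges, can be sketched as follows. Everything in it is hypothetical: the toy records, the `young` flag, the `social_score` attribute and the threshold in the stand-in `new_model` are invented for illustration and do not describe any actual insurer’s system.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Driver:
    young: bool          # hypothetical attribute used by the old rule
    social_score: float  # hypothetical score derived from social media data
    had_claim: bool      # the known insurance history the models are judged against


def old_rule(d: Driver) -> bool:
    # Previous model: every young driver is treated as high risk.
    return d.young


def new_model(d: Driver) -> bool:
    # Stand-in for an opaque learned model; the threshold is arbitrary.
    return d.social_score > 0.5


def evaluate(predict: Callable[[Driver], bool], drivers: List[Driver]) -> dict:
    fp = sum(predict(d) and not d.had_claim for d in drivers)  # unjustly higher premium
    fn = sum(d.had_claim and not predict(d) for d in drivers)  # risk underestimated
    accuracy = sum(predict(d) == d.had_claim for d in drivers) / len(drivers)
    return {"accuracy": accuracy, "false_positives": fp, "false_negatives": fn}


drivers = [
    Driver(True, 0.7, True), Driver(True, 0.2, False), Driver(True, 0.6, False),
    Driver(False, 0.8, True), Driver(False, 0.1, False), Driver(False, 0.9, False),
    Driver(True, 0.4, False), Driver(False, 0.3, False),
]

old, new = evaluate(old_rule, drivers), evaluate(new_model, drivers)
# The business case compares only overall accuracy; individual errors do not enter it.
adopt_new = new["accuracy"] > old["accuracy"]
print(old, new, adopt_new)
```

In this toy data the new rule still charges two drivers without any claim a higher premium (the third and sixth records), yet the switch is justified by the aggregate figure alone.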

Finally, the deepest layer of black stems from the fact that these boxes are no longer predictable in the sense that the same input always produces the same output. As adaptive systems, their internals change all the time. While this is not strictly an issue of artificial intelligence – the Google search algorithm changes all the time, due to its continuous development by a large number of people – it is one of the core characteristics of such systems that they can “learn” and “adapt” as the environment changes. In other words, it is a feature, not a bug, that the relationship between input and output is unstable. While this unpredictability in many cases increases their usefulness, it further reduces the legibility of the system, since it is much more difficult to draw inferences from one instance of its application to the next. Such systems become, essentially, “uninterpretable”.[9] This makes the boxes very black indeed. In itself, this is neither good nor bad; it is simply an ontological feature of these types of technologies. However, technologies never exist by themselves; rather, they are always deeply shaped by the institutional environment through which they are brought into being and whose agendas they are employed to extend. There is no such thing as autonomous technology. Period.[10] Even technologies that can make evaluations and decisions themselves are always embedded in larger settings that enable them to make decisions in the first place and that are circumscribed by the other actors that make up this environment.
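
The instability described here can be illustrated with a deliberately tiny Python sketch: a hypothetical `AdaptiveFilter` that updates its decision rule (a running mean) with every value it observes. It is not a machine-learning system in any serious sense, only an assumed minimal stand-in for one, but it shows the property at issue: after the environment changes, the same input produces a different output, although nothing is broken.

```python
class AdaptiveFilter:
    """Hypothetical adaptive system whose decision rule drifts with every input it sees.

    A value is flagged as 'unusual' if it exceeds the running mean of the
    values observed so far (0.0 before any observation).
    """

    def __init__(self) -> None:
        self.mean = 0.0
        self.count = 0

    def classify(self, value: float) -> str:
        return "unusual" if value > self.mean else "normal"

    def observe(self, value: float) -> None:
        # Incremental (online) update of the running mean.
        self.count += 1
        self.mean += (value - self.mean) / self.count


f = AdaptiveFilter()
first = f.classify(5.0)       # 'unusual': nothing observed yet, the mean is still 0.0
for v in [8.0, 9.0, 10.0]:    # the environment changes
    f.observe(v)
second = f.classify(5.0)      # 'normal': same input, different output
print(first, second, f.mean)  # unusual normal 9.0
```

For an operator relying on a remembered input/output mapping, this change would look exactly like a fault; for the adaptive system it is intended behaviour.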

The general public often first encounters new technologies while they are still being developed within university settings. Hence the cultural values and agendas that prevail in this environment – transparency, self-organization, peer communication, access to knowledge, learning, absence of the profit motive, etc. – are often very prominent in the envisioned applications of these technologies. This contributes to the enthusiasm of early adopters, who often misread such humanist values as essential features of the technologies and extrapolate them to all contexts in which the technologies might be applied.

Division of learning and the division of power

Yet the values and agendas that the technologies embody can change quite dramatically as they move out of the university/research setting and become embedded in dominant social institutions. Today, the dominant social institutions (particularly in the US, where most Western AI development takes place) are large-scale corporations.[11] They alone have the resources and the knowledge to develop and employ AI at a scale where it affects society at large, and the application of this technology largely advances their interests. Shoshana Zuboff recently described the cumulative effect of implementing advanced digital technology almost exclusively in the interests of large corporations as creating “surveillance capitalism”,[12] a system of constant extraction of behavioural data in order to predict and intervene in people’s life choices so that their behaviour can be optimized in relation to corporate strategies.

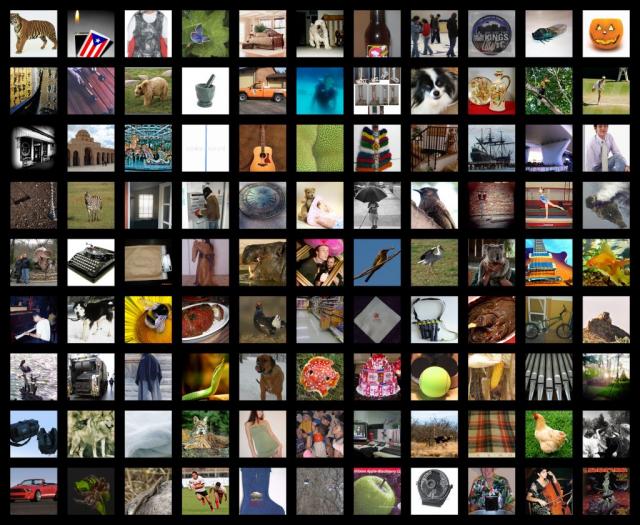

Trevor Paglen – Behold These Glorious Times!, 2017

A core feature of this new system is what she calls a “new division of learning”, in which the ability to learn – despite all the slogans of the democratization of knowledge – is increasingly concentrated in the hands of these corporations. Only they have the means – the presence in our lives (through communication devices and the Internet of Things), the industrial infrastructure to collect and process vast quantities of data, and the advanced technological knowledge to transform that data into real-time knowledge – to accelerate their learning through artificial intelligence (or, more generally, big data applications). And they not only have the means to generate knowledge but, through their presence in our lives, also to act on that knowledge, say, by showing us particular kinds of information based on our queries, giving us preferential treatment in commercial or social situations, and so on.

As we have seen, artificial intelligence tends, for technical reasons, towards the creation of very black boxes. But in the real world of applied commercial systems, technological blackness is compounded by additional layers, as Frank Pasquale points out in his book The Black Box Society.[13] These layers are organizational, for example buying up independent AI companies, hiring key personnel away from universities, where publishing results is mandated, and centralizing applications in data centres; and legal, for example making extensive use of proprietary licenses, non-disclosure agreements and trade secrecy, effectively barring anyone involved in the development of these technologies from ever talking about them to outsiders. One could add even more “non-technical” layers than those mentioned by Pasquale. For example, design decisions often emphasize “frictionlessness”, that is, ease of use achieved by hiding complexity, leaving users, including professional users such as pilots, with very little understanding of how the machine works and how to intervene in case of trouble. These additional layers deepen the black until it becomes a kind of Vantablack. Vanta stands for “Vertically Aligned Nano Tube Array”; the material, developed in the UK in 2014 and based on carbon nanotubes, absorbs almost all light, creating what was then the blackest black ever made. Soon after, the artist Anish Kapoor bought, for an undisclosed sum, the exclusive license to use this material in art,[14] effectively monopolizing this new means of expression through market mechanisms.

Against absolute power

Kapoor’s monopoly of expression can serve as a more general metaphor for the effect of Vantablack boxes in the real world. It is the return of the absolute power once held by kings and popes, who also had colours exclusive to them. In effect, the spread of these extra-black boxes threatens to roll back a history of democratization which, in Europe, restarted with the adoption of the Magna Carta in England in 1215.[15] With this kind of AI, the subjects (or objects, if you follow Zuboff) once again have to accept a verdict based on unknown rules, applied in an unpredictable manner, without any recourse to challenge the process or the outcome. And the social effects are as one would expect. The exercise of absolute power benefits primarily those who are capable of exercising it, as we can see from the vast fortunes amassed by the core owners and managers of these companies, as well as from the increasing dependence of all aspects of life, from smart city management to personalized health care, on their services.

The point here is not to argue against AI. That would be both counterproductive, because we need AI to manage today’s dynamism and complexity, and pointless, because algorithmicity – machine processing of large quantities of information co-creating our contemporary world – has long since become part of our digital condition.[16] The point here is, first, to acknowledge that AI presents a different kind of black box than those of conventionally complex apparatuses and, second, to insist that it is the political economy of “really existing AI” that deepens and hardens the blackness of the AI box. The conclusion to draw from this is that the solution to the problem of AI constituting a new form of power cannot be purely technical. Left to its own devices, that is, within the existing techno-political framework, even laudable notions such as “explainable AI” – systems which “provide not only an output, but also a human-understandable explanation that expresses the rationale of the machine”[17] – will simply help the engineers and their managers to assess the systems better, without piercing the organizational, legal and design layers that make these systems so deeply black.

Thus, it is the legal, institutional and cultural layers that need to be adapted to address the tendency of AI systems to be developed and run as very black boxes. On the legal side, the most comprehensive approach today is the European General Data Protection Regulation (GDPR), which establishes for the first time a subject’s right to receive “meaningful information about the logic involved, as well as the significance and the envisaged consequences of such processing for the data subject.” This gives Europeans legal grounds to demand an explanation of the systems, that is, of the logic of the model underlying the black box, as well as of the institutional consequences that the system’s output generates. Unfortunately, this only relates to the ability of the subject to opt out of having his or her personal data processed through such a system.[18] Much stronger legal protections will need to be developed in order to limit the power of the institutions capable of developing and employing such systems.

As already stated, actually existing technologies are always deeply shaped by the institutions that create and employ them. And it is here that artists can play a particular role. It is their unique ability to develop new languages and new aesthetics that makes accessible those dimensions of these systems that remain underdeveloped in strictly commercial settings or in disciplinary technical research. Works such as Trevor Paglen’s Behold These Glorious Times! can develop languages that allow us to see how deeply even highly technical and abstract processes depend on, and incorporate, historically and culturally specific assumptions, stripping away the veneer of objectivity that comes with mathematical language. This allows for a necessary, open debate about the kinds of biases society wants to build into the technologies and how to account for them, rather than simply accepting often unconscious biases and the questionable rationales of very small groups of people. But it is not just reflexivity that art can provide here. Given the relatively narrow set of goals towards which AI is optimized for commercial reasons and ease of use, there is a whole range of applications and experiences that are left unarticulated. There is a vast unknown field, lacking language and experiences, of what AI systems could do if they were not hemmed in by the bottom line but were instead focussed on finding new ways for different life-forms to co-inhabit the world, as in Jenna Sutela’s work nimiia cétiï.

Blacker than black

And sometimes it is even possible to create alternative modes of production that counter the tendency towards concentration inherent in the new division of learning. When Anish Kapoor bought the exclusive right to Vantablack, the British artist Stuart Semple, who had been making his own colours for more than 20 years, became so incensed by this new monopoly on a means of expression that he decided to create his own black, even blacker than Vantablack. For him, this was an issue of the freedom of art and, even more, of the freedom of expression. How many artworks would never be realized because of Kapoor’s monopoly? We will never know. After quite a bit of research, he succeeded and created a new black, Black 3.0, the blackest black ever! In order to produce it and sell it to artists around the world at very affordable prices, he launched a crowdfunding campaign, which raised close to twenty times the amount needed.[19]

What this example shows is that commercial monopolies, or the “new division of learning”, are not inevitable but the consequence of institutional design. Artists do not just imagine a different world; their articulations can set in motion powerful processes that bring this different world into being. Creating AI systems more aligned with democratic values will take more than a clever Kickstarter campaign, but without the articulation of a new aesthetic, a new relationship to and purpose for these technologies, no alternative will even be imaginable, let alone realizable.

Cite as: Stalder, Felix (2019): “The Deepest of Black. AI as Social Power / Das tiefste Schwarz. KI als soziale Macht”, in: Himmelsbach, Sabine and Boris Magrini (eds.): Entangled Realities – Living with Artificial Intelligence / Leben mit künstlicher Intelligenz, Basel: Christoph Merian Verlag, pp. 125–147.

Endnotes

1 “In science, computing, and engineering, a black box is a device, system or object which can be viewed in terms of its inputs and outputs (or transfer characteristics), without any knowledge of its internal workings. Its implementation is ‘opaque’ (black). Almost anything might be referred to as a black box: a transistor, an algorithm, or the human brain.” https://en.wikipedia.org/wiki/Black_box.

2 Passig, Kathrin (2017): “Fünfzig Jahre Black Box”.

3 This is important, but it still doesn’t make such systems fully transparent, because it is often from the interaction of known parts that unknown effects occur. This is one of the reasons why it is so difficult to debug software.

4 This is, of course, a simplified account of the relationship between people and machines. Media theory, at least since Marshall McLuhan, has shown that tools shape their users as much as users use their tools.

5 See Burrell, Jenna (2016): “How the machine ‘thinks’: Understanding opacity in machine learning algorithms”. Big Data & Society 3/1.

6 Ruddick, Graham (2016): “Admiral to price car insurance based on Facebook posts”. The Guardian online, 2.11.2016, https://www.theguardian.com/technology/2016/nov/02/admiral-to-price-car-insurance-based-on-facebook-posts (accessed 18.3.2019).

7 O’Neil, Cathy (2016): Weapons of math destruction: how big data increases inequality and threatens democracy. London: Allen Lane, Penguin Random House.

8 “All legal procedures set by statute and court practice, including notice of rights, must be followed for each individual so that no prejudicial or unequal treatment will result. While somewhat indefinite, the term can be gauged by its aim to safeguard both private and public rights against unfairness.” Due Process, Legal Dictionary. https://dictionary.law.com/Default.aspx?selected=595 (accessed 18.3.2019).

9 “Explainability” of a system refers to the ability to understand why a system produces output B from input A. Truly black boxes are, by definition, not explainable. “Interpretability” refers to the ability to relate output B back to input A. This allows us to interpret B as an effect of the cause A. The elevator arrives (B) because I pressed a button (A). In most everyday situations, interpretability of a system is enough, and we only need explanations when something unexpected happens.

10 A truly autonomous car would decide by itself where to drive. A driverless car’s task is to drive safely to the destination set by the user, along parameters defined by the mapping data.

11 Even the national security apparatuses are increasingly turning to, and becoming dependent on, these commercial firms for their operations. In November 2017, AWS (Amazon Web Services), Amazon’s cloud computing division, announced “AWS Secret Region”, a dedicated facility to handle highly classified information for the “U.S. Intelligence Community”. https://aws.amazon.com/blogs/publicsector/announcing-the-new-aws-secret-....

12 Zuboff, Shoshana (2018): The age of surveillance capitalism: the fight for a human future at the new frontier of power. New York: PublicAffairs.

13 Pasquale, Frank (2015): The black box society: the secret algorithms that control money and information. Cambridge: Harvard University Press.

14 The light-absorbing capacity of this colour is so strong that in 2018 a visitor to the Serralves Museum in Porto fell into a work by Kapoor, a 2.5-metre-deep hole coated with Vantablack, which effectively looked like a flat surface.

15 It is no coincidence that it was the Magna Carta that first defined the principle of “due process” as a requirement for the legitimate exercise of authority. Initially, this applied only to the nobility but was, over time, extended to all citizens.

16 Stalder, Felix (2018): The digital condition. Cambridge, UK; Medford, MA: Polity Press.

17 Doran, Derek / Schulz, Sarah / Besold, Tarek R. (2017): “What Does Explainable AI Really Mean? A New Conceptualization of Perspectives”. arXiv:1710.00794 [cs], http://arxiv.org/abs/1710.00794 (accessed 20.3.2019).

18 Burt, Andrew (2017): “Is there a ‘right to explanation’ for machine learning in the GDPR?”. The International Association of Privacy Professionals (IAPP), https://iapp.org/news/a/is-there-a-right-to-explanation-for-machine-lear... (accessed 20.3.2019).

19 As a swipe at Kapoor, anyone wanting to buy the black had to “confirm that you are not Anish Kapoor, you are in no way affiliated to Anish Kapoor, you are not backing this on behalf of Anish Kapoor or an associate of Anish Kapoor. To the best of your knowledge, information and belief this material will not make its way into the hands of Anish Kapoor.” https://www.kickstarter.com/projects/culturehustle/the-blackest-black-pa....