One of the relatively little-discussed phenomena in the social critique of AI is that it is not only a centralizing technology, but also one that increases both the distance between people and the power of those who own the coordinating infrastructure. Of course, infrastructure does not directly determine outcomes, but it matters which way the playing field is tilted.

I think it's possible to look at the history of Internet infrastructure, particularly those aspects that users interact with, and see it as shaped by successive waves of centralization. Think Usenet/email (1980s), Web (1990s), Web2.0 (2000s), Web3 (2010s) and AI (2020s). The dates here do not indicate a historical periodization (they are way too neat), but serve as a heuristic device for this particular purpose. So, when thinking of email, think of email in the 1980s (self-hosted) rather than contemporary Gmail (centrally hosted).

In each of these waves, processing power moved from the edges of the network into the center. This is not always a bad thing, but it affects what is possible. For one, it lowers the barrier to entry for users (the web was clearly more user-friendly than Usenet), but it also adds power to whoever controls the central infrastructure. Hosting a discussion forum on your webserver provides the host with more control over the discussion than distributing a Usenet group. Again, this is not necessarily a bad thing when it comes to, say, design, technical improvements, or moderation. Decentralization (of certain technical aspects) is not a positive value per se and it's not incompatible with centralization (of other technical aspects).

Web2.0 centralized the publication and interaction infrastructure, massively increasing the power of the owners, who became extremely wealthy in the process. This placed users, locked into the walled gardens by network effects and convenience, at the mercy of shifting business strategies. What was largely left intact, though, was that people were still interacting with people, which left open the possibility that they would take their interaction elsewhere.

Web3 added more complexity to the middleware (blockchain), and true to the mantra of trustlessness, moved the interaction away from people towards pseudonymous wallets and "smart contracts". In effect, once on the blockchain, the interaction could never leave it. For some weird reason, this was seen as a positive design feature.

AI, say ChatGPT, adds more complexity and power to the central node. Rather than only setting the rules of engagement (between users or between wallets), it also centralizes the engagement itself. People no longer interact with each other; they interact individually with the AI itself. Since the AI is personalized and generative (i.e. stochastic), no two interactions will ever be the same, further isolating users from each other. While the AI depends on user interaction and open sources (as training data), its practice kills both, not only by focussing all user attention on itself but also by cutting all references to the underlying human-generated sources (and the social relations embodied therein). For AI, sources are dissolved into training data: no longer individual documents with meaning, contexts and histories, but dividual latent patterns.

AI does not refer beyond itself, thus effectively cutting off the possibility of human-to-human interaction. Imagine the Internet was nothing but ChatGPT. While you might get a nebulous feeling that there are other people out there, there would be no way of ever knowing what any one of them thinks or getting in contact with them. (The way BingChat references sources might mitigate this a little bit.)

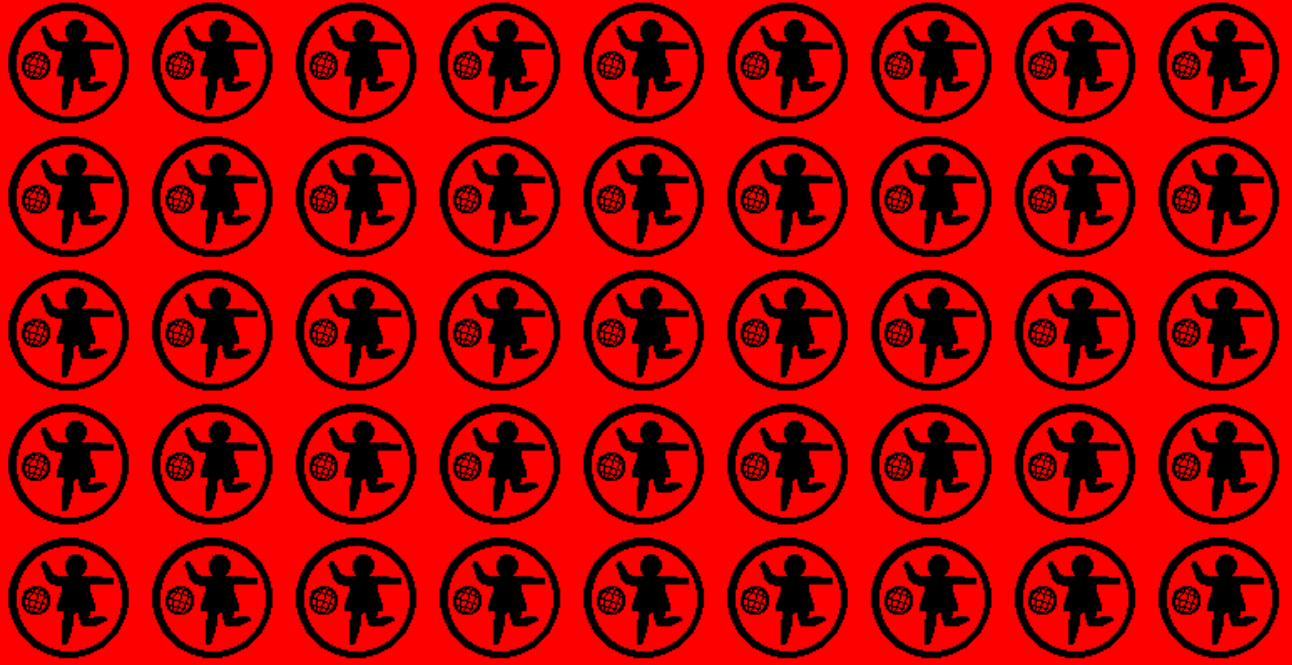

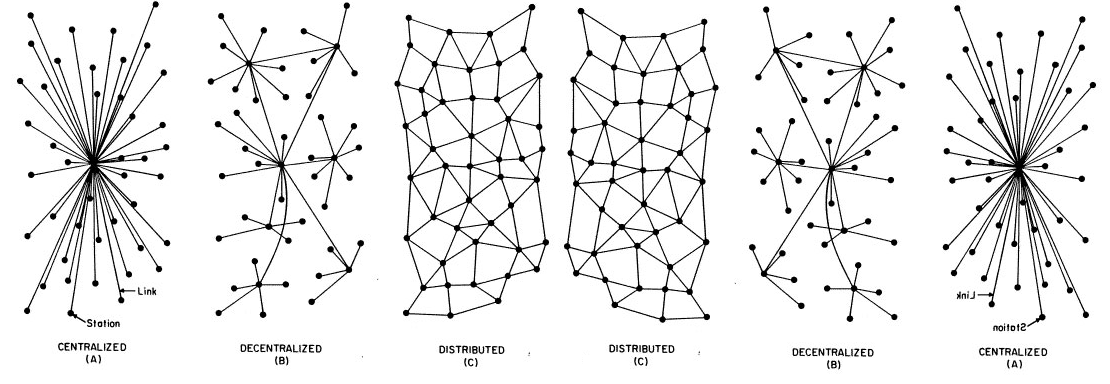

If we think of this in terms of network topology along the lines sketched by Paul Baran in 1964, we might say that we have come full circle, from the mesh network back to the star.
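To make the topological point a bit more tangible, here is a minimal sketch in Python. It is only an illustration under simplifying assumptions of my own: the "mesh" is modelled as a full mesh (every node linked to every other, which is denser than Baran's distributed network), the "star" has a single hub (node 0), and the helper names `star`, `mesh` and `reachable_pairs` are made up for this example. It counts how many pairs of participants can still reach each other once one node is taken away.

```python
from itertools import combinations


def star(n):
    """Star topology: node 0 is the hub, every other node links only to it."""
    return {(0, i) for i in range(1, n)}


def mesh(n):
    """Full mesh (a simplifying assumption): every pair of nodes is linked."""
    return set(combinations(range(n), 2))


def reachable_pairs(n, edges, removed=None):
    """Count pairs of remaining nodes that can still reach each other."""
    nodes = [v for v in range(n) if v != removed]
    adj = {v: set() for v in nodes}
    for a, b in edges:
        # Links touching the removed node disappear with it.
        if a in adj and b in adj:
            adj[a].add(b)
            adj[b].add(a)

    seen = set()
    total = 0
    for start in nodes:
        if start in seen:
            continue
        # Depth-first search to measure the size of this connected component.
        stack = [start]
        seen.add(start)
        size = 0
        while stack:
            v = stack.pop()
            size += 1
            for w in adj[v]:
                if w not in seen:
                    seen.add(w)
                    stack.append(w)
        # Every pair inside one component can reach each other.
        total += size * (size - 1) // 2
    return total


if __name__ == "__main__":
    n = 6
    print("star, intact:     ", reachable_pairs(n, star(n)))
    print("star, hub removed:", reachable_pairs(n, star(n), removed=0))
    print("mesh, intact:     ", reachable_pairs(n, mesh(n)))
    print("mesh, one removed:", reachable_pairs(n, mesh(n), removed=0))
```

In the star, every connection between two participants runs through the hub, so without the hub no pair can reach each other at all. In the mesh, losing any single node leaves everyone else connected.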

(All of this is independent of the argument that this type of AI is a "stochastic parrot".)

This post was first published on my hometown/mastodon account at tldr.nettime.org